MCP is a relatively new protocol, introduced by Anthropic, that aims to simplify and standardize how large language models (LLMs) interact with external data. These days, it’s also the hottest topic on X and several AI-related forums.

I want to start by emphasizing that the Model Context Protocol is an open-source protocol. It’s not limited to Claude. Anthropic, it seems, wants broad adoption and contributions from the community.

But right now, Claude Desktop is the gold standard for MCP integration. It supports MCP servers with minimal setup… you just edit a claude_desktop_config.json file, and you’re connected. It’s perfect for non-coders and quick tasks.
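To make that config edit concrete, here is a minimal sketch in Python that builds the kind of entry claude_desktop_config.json typically holds and prints it as JSON. The server name "filesystem", the npx package, and the directory path are illustrative assumptions, not values taken from this article, so check the README of the server you actually want to run.

```python
import json

# Hypothetical server entry. The name "filesystem", the package, and the
# directory path are placeholders chosen for illustration only.
config = {
    "mcpServers": {
        "filesystem": {
            # Command Claude Desktop launches to start the server process.
            "command": "npx",
            # Arguments passed to that command.
            "args": [
                "-y",
                "@modelcontextprotocol/server-filesystem",
                "/path/to/allowed/dir",
            ],
        }
    }
}

# Serialize to the JSON text you would paste into claude_desktop_config.json.
config_text = json.dumps(config, indent=2)
print(config_text)
```

Each key under "mcpServers" names one server, so adding a second server means adding a second entry alongside the first.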

Other clients like Windsurf, Cursor, and Cline are emerging, and it’s probably a good idea to keep an eye on them too.

Let’s dive a bit into the protocol itself now.

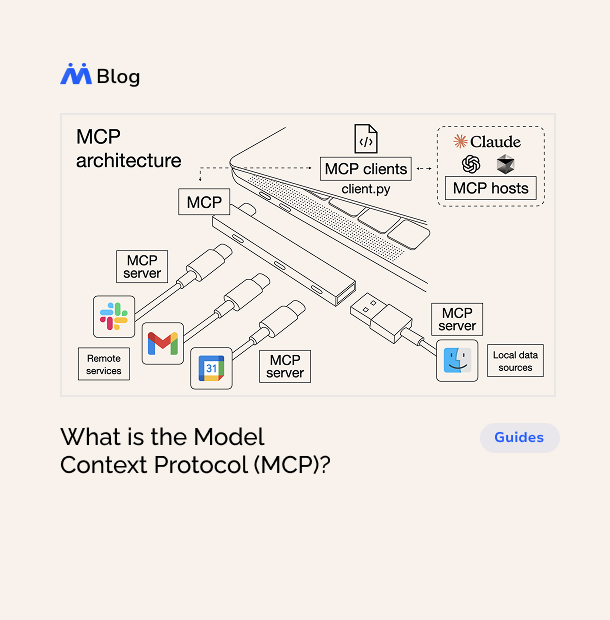

We need to think of MCP as a universal adapter that lets LLMs access real-world data (like files, databases, APIs, and services) in a consistent, scalable way.

Claude and ChatGPT are powerful large language models, but they are limited to their training data and user prompts. They can’t natively access live data (like a GitHub repository or the current weather) or perform actions (say, run a script) without external help.

In the pre-MCP era, this limitation was overcome by writing custom one-off scripts. These were usually clunky, unstable, and inefficient. MCP is trying to eliminate that chaos.

MCP operates on a client-server architecture, splitting responsibilities between two main components: MCP servers and MCP hosts.
